docs/contrib/README.md

# Contrib Documentation Index

## 📚 General Information

- [📄 README](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/README.md) - General introduction to the contribution documentation.
- [📑 Development Guide](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/development.md) - Guidelines for setting up a development environment.

## 🛠 Setup and Installation

- [🌍 Environment Setup](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/environment.md) - Instructions on setting up the development environment.
- [🐳 Docker Installation Guide](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/install-docker.md) - Steps to install Docker for container management.
- [🔧 OpenIM Linux System Installation](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/install-openim-linux-system.md) - Guide for installing OpenIM on a Linux system.

## 💻 Development Practices

- [👨‍💻 Code Conventions](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/code-conventions.md) - Coding standards to follow for consistency.
- [📐 Directory Structure](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/directory.md) - Explanation of the repository's directory layout.

- [🔀 Git Workflow](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/git-workflow.md) - The workflow for using Git in this project.

- [💾 GitHub Workflow](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/github-workflow.md) - Workflow guidelines for GitHub.

## 🧪 Testing and Deployment

- [⚙️ CI/CD Actions](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/cicd-actions.md) - Continuous integration and deployment configurations.
- [🚀 Offline Deployment](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/offline-deployment.md) - How to deploy the application offline.

## 🔧 Utilities and Tools

- [📦 Protoc Tools](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/protoc-tools.md) - Protobuf compiler-related utilities.
- [🔨 Utility Go](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/util-go.md) - Go utilities and helper functions.
- [🛠 Makefile Utilities](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/util-makefile.md) - Makefile scripts for automation.
- [📜 Script Utilities](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/util-scripts.md) - Utility scripts for development.

## 📋 Standards and Conventions

- [🚦 Commit Guidelines](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/commit.md) - Standards for writing commit messages.
- [✅ Testing Guide](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/test.md) - Guidelines and conventions for writing tests.
- [📈 Versioning](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/version.md) - Version management for the project.

## 🖼 Additional Resources

- [🌐 API Reference](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/api.md) - Detailed API documentation.
- [📚 Go Code Standards](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/go-code.md) - Go programming language standards.
- [🖼 Image Guidelines](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/images.md) - Guidelines for image assets.

## 🐛 Troubleshooting

- [🔍 Error Code Reference](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/error-code.md) - List of error codes and their meanings.
- [🐚 Bash Logging](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/bash-log.md) - Logging standards for bash scripts.
- [📈 Logging Conventions](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/logging.md) - Conventions for application logging.
- [🛠 Local Actions Guide](https://github.com/openimsdk/open-im-server-deploy/tree/main/docs/contrib/local-actions.md) - How to perform local actions for troubleshooting.

docs/contrib/api.md

## Interface Standards

Our project, OpenIM, adheres to the [OpenAPI 3.0](https://spec.openapis.org/oas/latest.html) interface standards.

> Chinese translation: [OpenAPI Specification Chinese Translation](https://fishead.gitbook.io/openapi-specification-zhcn-translation/3.0.0.zhcn)

docs/contrib/bash-log.md

## OpenIM Logging System: Design and Usage

**PATH:** `scripts/lib/logging.sh`

### Introduction

OpenIM is a complex project and needs a robust logging mechanism to diagnose issues, monitor system health, and provide insight into what its scripts are doing. The custom logging system built into OpenIM keeps logs consistent and structured. This document describes how the system is designed, what its functions do, and when to use them.

### Design Overview

1. **Initialization**: The system first determines the verbosity level from the `OPENIM_VERBOSE` variable. If it is not set, it defaults to 5. The verbosity level controls how much detail is logged.
2. **Log File Setup**: Logs are stored in the directory specified by `OPENIM_OUTPUT`. If this variable is not set, it defaults to the `_output` directory relative to the script location. Each log file is named after the date, which makes the log for a given day easy to find.
3. **Logging Function**: The `echo_log()` function writes each message both to the console (stdout) and to the log file.
4. **Logging to a File**: `echo_log()` appends each message to a log file under `_output/logs/` and adds a timestamp to it. File logging is enabled by default; to turn it off, run `export ENABLE_LOGGING=false`.
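
The four steps above can be condensed into a small, self-contained sketch. It is not the code in `scripts/lib/logging.sh`: the `demo::` prefix, the `openim-YYYY-MM-DD.log` file-name pattern, and the timestamp format are assumptions chosen for illustration.

```bash
#!/usr/bin/env bash
# Illustrative sketch of the design above; NOT the real scripts/lib/logging.sh.
# demo:: names and the daily file-name pattern are assumptions.

# 1. Verbosity defaults to 5 when OPENIM_VERBOSE is unset.
OPENIM_VERBOSE="${OPENIM_VERBOSE:-5}"

# 2. Output directory defaults to _output next to this script.
SCRIPT_DIR="$(cd "$(dirname "${BASH_SOURCE[0]:-$0}")" && pwd)"
OPENIM_OUTPUT="${OPENIM_OUTPUT:-${SCRIPT_DIR}/_output}"

# 4. File logging stays on unless ENABLE_LOGGING is explicitly "false".
ENABLE_LOGGING="${ENABLE_LOGGING:-true}"

# Path of today's log file (one file per day).
demo::log_file() {
  echo "${OPENIM_OUTPUT}/logs/openim-$(date +%Y-%m-%d).log"
}

# 3. Print a message to stdout and, if enabled, append a timestamped copy to the log file.
demo::echo_log() {
  local log_file
  echo "$*"
  if [[ "${ENABLE_LOGGING}" != "false" ]]; then
    log_file="$(demo::log_file)"
    mkdir -p "$(dirname "${log_file}")"
    echo "[$(date '+%Y-%m-%d %H:%M:%S')] $*" >> "${log_file}"
  fi
}
```

For example, `ENABLE_LOGGING=false demo::echo_log "hello"` prints to the console only, while a plain `demo::echo_log "hello"` also appends a timestamped line to the day's file.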

### Key Functions & Their Usages

1. **Error Handling**:
   - `openim::log::errexit()`: Called when a command exits with an error. It prints a call tree showing the sequence of function calls that led to the error, then calls `openim::log::error_exit()` with the relevant details.
   - `openim::log::install_errexit()`: Sets up the trap that catches errors and ensures the error handler (`errexit`) is inherited by functions, command substitutions, and subshells.
2. **Logging Levels**:
   - `openim::log::error()`: Logs error messages with a timestamp. Each message starts with `!!!` to indicate its severity.
   - `openim::log::info()`: Logs informational messages. Whether they are displayed depends on the verbosity level (`OPENIM_VERBOSE`).
   - `openim::log::progress()`: Logs progress messages, for example to render a progress bar.
   - `openim::log::status()`: Logs status messages with a timestamp, prefixing each entry with `+++` for easy identification.
   - `openim::log::success()`: Highlights successful operations with a bright green prefix, which makes completed operations easy to spot.
3. **Exit and Stack Trace**:
   - `openim::log::error_exit()`: Logs an error message, dumps the call stack, and exits the script with the specified exit code.
   - `openim::log::stack()`: Prints a stack trace showing the call hierarchy leading to the point where it was invoked.
4. **Usage Information**:
   - `openim::log::usage()` and `openim::log::usage_from_stdin()`: Both display usage instructions. The former takes the text as arguments, while the latter reads it from stdin.
5. **Test Function**:
   - `openim::log::test_log()`: A test routine that checks that all logging functions work as expected.
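
To make the error-handling and level functions more concrete, here is a compact sketch of how such helpers are commonly built in Bash with an `ERR` trap, `set -o errtrace`, and the `FUNCNAME`/`BASH_SOURCE`/`BASH_LINENO` arrays. It mirrors the behavior described above but is not the OpenIM implementation; the `demo::log::` names, the timestamp format, and the `V` variable used to give a message its verbosity level are assumptions.

```bash
#!/usr/bin/env bash
# Illustrative sketch only; the real helpers live in scripts/lib/logging.sh.

OPENIM_VERBOSE="${OPENIM_VERBOSE:-5}"

demo::log::timestamp() { date '+%m%d %H:%M:%S'; }

# Errors go to stderr with a "!!!" prefix.
demo::log::error() { echo "!!! [$(demo::log::timestamp)] $*" >&2; }

# Status lines use a "+++" prefix.
demo::log::status() { echo "+++ [$(demo::log::timestamp)] $*"; }

# Info is printed only if the message level V (default 0) does not exceed OPENIM_VERBOSE.
demo::log::info() {
  if (( ${V:-0} > OPENIM_VERBOSE )); then
    return 0
  fi
  echo "$*"
}

# Print the call stack, skipping the first $1 frames (this function and its helpers).
demo::log::stack() {
  local skip="${1:-1}" i
  echo "Call stack:" >&2
  for (( i = skip; i < ${#FUNCNAME[@]}; i++ )); do
    echo "  ${BASH_SOURCE[$i]:-?}:${BASH_LINENO[$((i - 1))]:-?} ${FUNCNAME[$i]}" >&2
  done
}

# Log a message, dump the stack, and exit with the given code (default 1).
demo::log::error_exit() {
  demo::log::error "$1"
  demo::log::stack 2
  exit "${2:-1}"
}

# ERR-trap handler: $1 is the failing command's exit status.
demo::log::errexit() {
  demo::log::error_exit "'${BASH_COMMAND}' failed with status $1 at ${BASH_SOURCE[1]:-?}:${BASH_LINENO[0]:-?}" "$1"
}

# Install the handler; errtrace makes functions, command substitutions and subshells inherit it.
demo::log::install_errexit() {
  trap 'demo::log::errexit "$?"' ERR
  set -o errtrace
}
```

In a script, `demo::log::install_errexit` would be called once near the top; any later failing command then prints a `!!!` line plus the call stack and stops the script.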

### Usage Scenario

Imagine a situation where an OpenIM operation fails and you need to find the cause. With the logging system in place, you can:

- Check the log file for that day for error messages with the `!!!` prefix.
- View the call tree and stack trace to trace back the sequence of operations leading to the failure.
- Use the verbosity level to filter out unnecessary details and focus on the crux of the issue.

This systematic, structured approach greatly simplifies debugging and makes system maintenance more efficient.
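
The first step can be scripted. The helper below is a hypothetical sketch, not part of the repository: it prints the `!!!` lines from the day's log files, and it assumes the daily files live under `_output/logs/` with the date in `YYYY-MM-DD` form in their names, so adjust the glob to the real naming scheme.

```bash
#!/usr/bin/env bash
# Hypothetical helper: show today's error lines ("!!!" prefix) with file names and line numbers.
# Assumes daily log files under $1 (default _output/logs) whose names contain YYYY-MM-DD.
find_today_errors() {
  local log_dir="${1:-_output/logs}"
  local today
  today="$(date +%Y-%m-%d)"
  # -F: match "!!!" literally; -H/-n: print file name and line number for each hit.
  grep -HnF '!!!' "${log_dir}"/*"${today}"*.log
}
```

Run `find_today_errors` from the repository root, or pass another log directory as the first argument.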

### Conclusion

OpenIM's logging system gives the project's scripts a consistent, structured way to report errors, status, and progress. By relying on it, developers and system administrators can troubleshoot faster and keep OpenIM running smoothly.

docs/contrib/cicd-actions.md

# Continuous Integration and Automation

Every change to the OpenIM repository, whether made through a pull request or a direct push, triggers the continuous integration pipelines defined in the same repository. OpenIM contributions cannot be merged until all checks pass (i.e., the build is green).

- [Continuous Integration and Automation](#continuous-integration-and-automation)
  - [CI Platforms](#ci-platforms)
    - [GitHub Actions](#github-actions)
  - [Running locally](#running-locally)

## CI Platforms

Currently, two different platforms are involved in running the CI processes:

- GitHub Actions
- Drone pipelines on CNCF infrastructure

### GitHub Actions

All existing GitHub Actions are defined as YAML files under the `.github/workflows` directory. They fall into two groups:

- **PR checks**. These actions run all required validations when a PR is created or updated, covering the DCO compliance check, the `x86_64` test batteries (unit, integration, smoke), and code coverage.
- **Repository automation**. Currently, this only covers issue and epic grooming.

Everything runs on GitHub-provided runners, so the tests only run on `x86_64` architectures.
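
Because the DCO compliance check is part of the PR checks, it can save a round trip to confirm locally that a commit carries a sign-off. The function below is a minimal sketch, not the CI job itself; it assumes the usual DCO convention of a `Signed-off-by: Name <email>` trailer, which `git commit -s` (`--signoff`) adds automatically.

```bash
#!/usr/bin/env bash
# Hypothetical local pre-check (not the CI job): succeeds if the commit message
# read from stdin contains a DCO "Signed-off-by: Name <email>" trailer line.
has_dco_signoff() {
  grep -Eq '^Signed-off-by: .+ <[^>]+>$'
}

# Usage: check the last commit and report a missing sign-off.
#   git log -1 --format=%B | has_dco_signoff || echo "Missing sign-off: run 'git commit --amend -s --no-edit'"
```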

## Running locally

Contributors should verify their changes locally to speed up the pull request process. Fortunately, all CI steps except the publishing ones can be run in a local environment with the following method:

**Use the Makefile:**

```bash
root@PS2023EVRHNCXG:~/workspaces/openim/Open-IM-Server# make help 😊

Usage: make <TARGETS> <OPTIONS> ...

Targets:

  all                  Run tidy, gen, add-copyright, format, lint, cover, build 🚀
  build                Build binaries by default 🛠️
  multiarch            Build binaries for multiple platforms. See option PLATFORMS. 🌍
  tidy                 tidy go.mod ✨
  vendor               vendor go.mod 📦
  style                code style -> fmt,vet,lint 💅
  fmt                  Run go fmt against code. ✨
  vet                  Run go vet against code. ✅
  lint                 Check syntax and styling of go sources. ✔️
  format               Gofmt (reformat) package sources (exclude vendor dir if existed). 🔄
  test                 Run unit test. 🧪
  cover                Run unit test and get test coverage. 📊
  updates              Check for updates to go.mod dependencies 🆕
  imports              task to automatically handle import packages in Go files using goimports tool 📥
  clean                Remove all files that are created by building. 🗑️
  image                Build docker images for host arch. 🐳
  image.multiarch      Build docker images for multiple platforms. See option PLATFORMS. 🌍🐳
  push                 Build docker images for host arch and push images to registry. 📤🐳
  push.multiarch       Build docker images for multiple platforms and push images to registry. 🌍📤🐳
  tools                Install dependent tools. 🧰
  gen                  Generate all necessary files. 🧩
  swagger              Generate swagger document. 📖
  serve-swagger        Serve swagger spec and docs. 🚀📚
  verify-copyright     Verify the license headers for all files. ✅
  add-copyright        Add copyright ensure source code files have license headers. 📄
  release              release the project 🎉
  help                 Show this help info. ℹ️
  help-all             Show all help details info. ℹ️📚

Options:

  DEBUG        Whether or not to generate debug symbols. Default is 0. ❓

  BINS         Binaries to build. Default is all binaries under cmd. 🛠️
               This option is available when using: make {build}(.multiarch) 🧰
               Example: make build BINS="openim-api openim_cms_api".

  PLATFORMS    Platform to build for. Default is linux_arm64 and linux_amd64. 🌍
               This option is available when using: make {build}.multiarch 🌍
               Example: make multiarch PLATFORMS="linux_s390x linux_mips64
               linux_mips64le darwin_amd64 windows_amd64 linux_amd64 linux_arm64".

  V            Set to 1 enable verbose build. Default is 0. 📝
```

### How to Use the Makefile to Build Projects Quickly 😊

The `make help` command shows how to use the Makefile effectively. Running it lists the targets and options available for building the project quickly.

Here's a breakdown of the targets and options provided by the Makefile:

**Targets 😃**

1. `all`: Runs `tidy`, `gen`, `add-copyright`, `format`, `lint`, `cover`, and `build` in one go, covering the full build pipeline.
2. `build`: The primary target; compiles the project binaries (by default, all binaries under `cmd`).
3. `multiarch`: Builds binaries for multiple platforms. Specify the desired platforms with the `PLATFORMS` option.
4. `tidy`: Tidies the `go.mod` file to keep it consistent.
5. `vendor`: Vendors the dependencies declared in `go.mod` into the project's `vendor` directory.
6. `style`: Checks code style by running the `fmt`, `vet`, and `lint` targets, keeping the coding style consistent across the project.
7. `fmt`: Formats the code with `go fmt`.
8. `vet`: Runs `go vet` to catch common mistakes in the code.
9. `lint`: Checks the syntax and style of Go source files with a linter.
10. `format`: Reformats package sources with `gofmt`, excluding the `vendor` directory if it exists.
11. `test`: Runs the unit tests.
12. `cover`: Runs the unit tests and reports test coverage.
13. `updates`: Checks for updates to the dependencies listed in `go.mod`.
14. `imports`: Fixes import statements in Go files with the `goimports` tool.
15. `clean`: Removes all files generated by the build, cleaning up the project directory.
16. `image`: Builds Docker images for the host architecture.
17. `image.multiarch`: Like `image`, but builds Docker images for multiple platforms, selected with the `PLATFORMS` option.
18. `push`: Builds Docker images for the host architecture and pushes them to a registry.
19. `push.multiarch`: Builds Docker images for multiple platforms and pushes them to a registry; select the platforms with the `PLATFORMS` option.
20. `tools`: Installs the tools the build depends on.
21. `gen`: Generates all necessary files.
22. `swagger`: Generates the Swagger document for the project.
23. `serve-swagger`: Serves the Swagger specification and documentation.
24. `verify-copyright`: Verifies the license headers of all project files.
25. `add-copyright`: Adds license headers to source files that lack them.
26. `release`: Releases the project.
27. `help`: Displays the available targets and options.
28. `help-all`: Displays detailed help for all targets and options.

**Options 😄**

1. `DEBUG`: A boolean option that determines whether or not to generate debug symbols. The default value is 0 (false).
2. `BINS`: Specifies the binaries to build. By default, it builds all binaries under the `cmd` directory. Contributors can provide a list of specific binaries using this option.
3. `PLATFORMS`: Specifies the platforms to build for. The default platforms are `linux_arm64` and `linux_amd64`. Contributors can specify multiple platforms by providing a space-separated list of platform names.
4. `V`: A boolean option that enables verbose build output when set to 1 (true). The default value is 0 (false).
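
Platform names passed to `PLATFORMS` follow an `<os>_<arch>` pattern such as `linux_amd64` or `darwin_amd64`. The function below is a hypothetical helper, not part of the repository, that derives that name for the current machine from `uname`; its mapping only covers common Linux and macOS values (for example, Windows shells report other `uname -s` strings), so treat it as a convenience sketch.

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of the repository): print the <os>_<arch> name used by
# the PLATFORMS option (e.g. linux_amd64) for the given or current machine.
platform_name() {
  local os="${1:-$(uname -s)}" arch="${2:-$(uname -m)}"
  os="$(echo "${os}" | tr '[:upper:]' '[:lower:]')"   # Linux -> linux, Darwin -> darwin
  case "${arch}" in
    x86_64|amd64) arch="amd64" ;;
    aarch64|arm64) arch="arm64" ;;
  esac
  echo "${os}_${arch}"
}

# Example invocations combining the documented options:
#   make multiarch PLATFORMS="$(platform_name) linux_arm64"
#   make build BINS="openim-api" V=1 DEBUG=1
```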

With these targets and options in place, contributors can efficiently build projects using the Makefile. Happy coding! 🚀😊

docs/contrib/code-conventions.md

# Code conventions

- [Code conventions](#code-conventions)
  - [POSIX shell](#posix-shell)
  - [Go](#go)
  - [OpenIM Naming Conventions Guide](#openim-naming-conventions-guide)
    - [1. General File Naming](#1-general-file-naming)
    - [2. Special File Types](#2-special-file-types)
      - [a. Script and Markdown Files](#a-script-and-markdown-files)
      - [b. Uppercase Markdown Documentation](#b-uppercase-markdown-documentation)
    - [3. Directory Naming](#3-directory-naming)
    - [4. Configuration Files](#4-configuration-files)
    - [Best Practices](#best-practices)
  - [Directory and File Conventions](#directory-and-file-conventions)
  - [Testing conventions](#testing-conventions)

## POSIX shell

- [Style guide](https://google.github.io/styleguide/shell.xml)

## Go

- [Go Code Review Comments](https://github.com/golang/go/wiki/CodeReviewComments)
- [Effective Go](https://golang.org/doc/effective_go.html)
- Know and avoid [Go landmines](https://gist.github.com/lavalamp/4bd23295a9f32706a48f)
- Comment your code.
  - [Go's commenting conventions](http://blog.golang.org/godoc-documenting-go-code)
  - If reviewers ask questions about why the code is the way it is, that's a sign that comments might be helpful.
- Command-line flags should use dashes, not underscores.
- Naming
  - Please consider the package name when selecting an interface name, and avoid redundancy. For example, `storage.Interface` is better than `storage.StorageInterface`.
  - Do not use uppercase characters, underscores, or dashes in package names.
  - Please consider the parent directory name when choosing a package name. For example, `pkg/controllers/autoscaler/foo.go` should say `package autoscaler`, not `package autoscalercontroller`.
    - Unless there's a good reason, the `package foo` line should match the name of the directory in which the `.go` file exists.
    - Importers can use a different name if they need to disambiguate.

## OpenIM Naming Conventions Guide

Welcome to the OpenIM Naming Conventions Guide. This document outlines the best practices and standardized naming conventions that our project follows to maintain clarity, consistency, and alignment with industry standards, specifically taking cues from the Google Naming Conventions.

### 1. General File Naming

Files within the OpenIM project should adhere to the following rules:

+ Both hyphens (`-`) and underscores (`_`) are acceptable in file names.
+ Underscores (`_`) are preferred for general files to enhance readability and compatibility.
+ For example: `data_processor.py`, `user_profile_generator.go`

### 2. Special File Types

#### a. Script and Markdown Files

+ Bash scripts and Markdown files should use hyphens (`-`) to facilitate better searchability and compatibility in web browsers.
+ For example: `deploy-script.sh`, `project-overview.md`

#### b. Uppercase Markdown Documentation

+ Markdown files with uppercase names, such as `README`, may include underscores (`_`) to separate words if necessary.
+ For example: `README_SETUP.md`, `CONTRIBUTING_GUIDELINES.md`

### 3. Directory Naming

+ Directories must use hyphens (`-`) exclusively to maintain a clean and organized file structure.
+ For example: `image-assets`, `user-data`

### 4. Configuration Files

+ Configuration files, including but not limited to `.yaml` files, should use hyphens (`-`).
+ For example: `app-config.yaml`, `logging-config.yaml`

### Best Practices

+ Keep names concise but descriptive enough to convey the file's purpose or contents at a glance.
+ Avoid using spaces in names; use hyphens or underscores instead to improve compatibility across different operating systems and environments.
+ Stick to lowercase naming where possible for consistency and to prevent issues with case-sensitive systems.
+ Include version numbers or dates in file names if the file is subject to updates, following the format: `project-plan-v1.2.md` or `backup-2023-03-15.sql`.
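
Sections 2 to 4 are mechanical enough to check automatically. The function below is a hypothetical sketch, not a tool shipped with OpenIM: it walks a directory tree and reports directories, Bash scripts, lowercase Markdown files, and YAML files whose names contain underscores, while letting Markdown files whose names start with an uppercase letter (such as `README_SETUP.md`) through. It deliberately ignores general files and Go files, and it will also flag existing underscore directories (for example `_output`), so treat its output as a review aid rather than a hard rule.

```bash
#!/usr/bin/env bash
# Hypothetical naming check for sections 2-4 above (not part of the repository).
# Prints one line per path under $1 (default ".") whose name uses "_" where "-" is expected.
check_naming() {
  local root="${1:-.}" path name
  while IFS= read -r -d '' path; do
    name="$(basename "${path}")"
    if [[ -d "${path}" ]]; then
      # 3. Directories: hyphens only.
      if [[ "${name}" == *_* ]]; then
        echo "directory: ${path}"
      fi
      continue
    fi
    case "${name}" in
      [[:upper:]]*.md)
        # 2b. Markdown names starting with an uppercase letter may use "_".
        ;;
      *.sh|*.md|*.yaml|*.yml)
        # 2a. Scripts and Markdown, 4. configuration files: hyphens only.
        if [[ "${name}" == *_* ]]; then
          echo "file: ${path}"
        fi
        ;;
    esac
  done < <(find "${root}" -mindepth 1 -not -path '*/.git/*' -print0)
}
```

Running `check_naming .` from the repository root prints nothing when every checked name follows these rules.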

## Directory and File Conventions

- Avoid generic utility packages. Instead of naming a package "util", choose a name that clearly describes its purpose. For instance, functions related to waiting are contained in the `wait` package, which includes functions like `Poll`, so the full name is `wait.Poll`.
- All filenames, script files, configuration files, and directories should be lowercase and use dashes (`-`) as separators.
- Go source files should be lowercase and use underscores (`_`) as separators.
- Package names should match their directory names to ensure consistency. For example, the `openim-api` directory contains the Go file `openim-api.go`, following the convention of using dashes in directory names and naming the file after its directory.

## Testing conventions

Please refer to the [testing documentation](https://github.com/openimsdk/open-im-server-deploy/tree/main/test/readme).

docs/contrib/commit.md

## Commit Standards

Our project, OpenIM, follows the [Conventional Commits](https://www.conventionalcommits.org/en/v1.0.0) standard.

> Chinese translation: [Conventional Commits: A Specification Making Commit Logs More Human and Machine-friendly](https://tool.lu/en_US/article/2ac/preview)

In addition to following this standard, all contributors to OpenIM should make sure their commit messages are clear and descriptive. This keeps the project history clean and meaningful. Each commit message should succinctly describe the changes made and, where necessary, the reasoning behind them.

To streamline the process, use the appropriate commit types from the Conventional Commits guidelines, such as `fix:` for bug fixes and `feat:` for new features. Following these conventions makes it possible to generate release notes automatically, simplifies versioning, and improves the readability of the commit history.
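
A commit header can be checked against the Conventional Commits format before pushing. The function below is a minimal sketch, not an OpenIM tool: the specification itself only mandates `feat` and `fix`, so the extra types in the allow-list (`docs`, `refactor`, `ci`, and so on) are an assumption borrowed from the widely used Angular convention and may not match what the project's tooling accepts.

```bash
#!/usr/bin/env bash
# Hypothetical check: succeeds if the first line of the message on stdin looks like
# "<type>[(scope)][!]: <description>" per Conventional Commits 1.0.0.
# The allowed types beyond feat/fix are an assumption.
is_conventional_commit() {
  local header re='^(feat|fix|docs|style|refactor|perf|test|build|ci|chore|revert)(\([^)]+\))?!?: .+'
  header="$(head -n 1)"
  [[ "${header}" =~ ${re} ]]
}

# Usage as a Git commit-msg hook (Git passes the message file path as $1):
#   is_conventional_commit < "$1" || { echo "Commit header must follow Conventional Commits" >&2; exit 1; }
```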

docs/contrib/development.md

# Development Guide

Since OpenIM is written in Go, it is fair to assume that the Go tools are all one needs to contribute to this project. However, that no longer holds once you need to build or test local changes. This document describes the tooling required for OpenIM development.

- [Development Guide](#development-guide)
  - [Non-Linux environment prerequisites](#non-linux-environment-prerequisites)
    - [Windows Setup](#windows-setup)
    - [macOS Setup](#macos-setup)
  - [Installing Required Software](#installing-required-software)
    - [Go](#go)
    - [Docker](#docker)
    - [Vagrant](#vagrant)
  - [Dependency management](#dependency-management)

## Non-Linux environment prerequisites

All test and build scripts in this repository were written to run in GNU/Linux development environments. For this reason, we recommend running them in the virtual machine defined by this repository's [Vagrantfile](https://developer.hashicorp.com/vagrant/docs/vagrantfile).

If you still want to build and test OpenIM in a non-Linux environment, follow the platform-specific setup below.

### Windows Setup

Building OpenIM on Windows is only possible on versions that support the Windows Subsystem for Linux (WSL). If the development environment runs Windows 10 version 2004 (build 19041) or higher, [follow these instructions to install WSL2](https://docs.microsoft.com/en-us/windows/wsl/install-win10); otherwise, use a Linux virtual machine instead.

### macOS Setup

The shell scripts that drive the build and test processes rely on GNU utilities (e.g., `sed`), [which differ slightly on macOS](https://unix.stackexchange.com/a/79357), so some adjustments are needed before using them.

First, install the GNU utilities:

```sh
brew install coreutils findutils gawk gnu-sed gnu-tar grep make
```

Then update the shell init script (e.g., `.bashrc`) to prepend the GNU utilities to the `$PATH` variable:

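```sh
# Prepend every Homebrew "gnubin" directory (<prefix>/opt/<formula>/libexec/gnubin)
# to PATH so the GNU tools take precedence over the BSD ones.
# $(brew --prefix) is /usr/local on Intel Macs and /opt/homebrew on Apple Silicon.
for bindir in $(find -L "$(brew --prefix)/opt" -maxdepth 3 -type d -name gnubin 2>/dev/null); do
  PATH="${bindir}:${PATH}"
done

export PATH
```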

## Installing Required Software

### Go

OpenIM is written in [Go](http://golang.org). Follow the [Go Getting Started guide](https://golang.org/doc/install) to install and set up the Go tools used to compile the project and run the test batteries.

| OpenIM      | Requires Go |
|-------------|-------------|
| 2.24 - 3.00 | 1.15+       |
| 3.30+       | 1.18+       |
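
To confirm that the installed toolchain satisfies the table, the major and minor version numbers can be compared in plain Bash. The function below is a hypothetical helper, not part of the repository; it ignores patch numbers and pre-release suffixes such as `rc1`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: succeed if Go version $1 (e.g. "go1.21.5" or "1.21") is at
# least $2 (e.g. "1.18"). Only major and minor numbers are compared.
go_version_at_least() {
  local have_major have_minor want_major want_minor
  IFS=. read -r have_major have_minor _ <<< "${1#go}"
  IFS=. read -r want_major want_minor _ <<< "$2"
  # Drop pre-release suffixes ("22rc1" -> "22") and default missing parts to 0.
  have_minor="${have_minor%%[!0-9]*}"
  have_minor="${have_minor:-0}"
  want_minor="${want_minor:-0}"
  (( have_major > want_major || (have_major == want_major && have_minor >= want_minor) ))
}

# Usage: check the local toolchain against the requirement for OpenIM 3.30+
# (go env GOVERSION requires Go 1.16 or later).
#   go_version_at_least "$(go env GOVERSION)" 1.18 || echo "Go 1.18 or newer is required" >&2
```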

### Docker

The OpenIM build and test processes require Docker for certain steps. [Follow the instructions on the Docker website to install Docker](https://docs.docker.com/get-docker/) in the development environment.

### Vagrant

As described in the [Testing documentation](https://github.com/openimsdk/open-im-server-deploy/tree/main/test/readme), all smoke tests run in virtual machines managed by Vagrant. To install Vagrant in the development environment, [follow the instructions on the HashiCorp website](https://www.vagrantup.com/downloads), and install one of the following hypervisors:

- [VirtualBox](https://www.virtualbox.org/)
- [libvirt](https://libvirt.org/) and the [vagrant-libvirt plugin](https://github.com/vagrant-libvirt/vagrant-libvirt#installation)

## Dependency management

OpenIM uses [go modules](https://github.com/golang/go/wiki/Modules) to manage dependencies.

docs/contrib/directory.md

## Directory Structure Specification

+ [https://github.com/kubecub/go-project-layout](https://github.com/kubecub/go-project-layout)

docs/contrib/environment.md

# OpenIM ENVIRONMENT CONFIGURATION

|

||||

|

||||

<!-- vscode-markdown-toc -->

|

||||

* 1. [OpenIM Deployment Guide](#OpenIMDeploymentGuide)

|

||||

* 1.1. [Deployment Strategies](#DeploymentStrategies)

|

||||

* 1.2. [Source Code Deployment](#SourceCodeDeployment)

|

||||

* 1.3. [Docker Compose Deployment](#DockerComposeDeployment)

|

||||

* 1.4. [Environment Variable Configuration](#EnvironmentVariableConfiguration)

|

||||

* 1.4.1. [Recommended using environment variables](#Recommendedusingenvironmentvariables)

|

||||

* 1.4.2. [Additional Configuration](#AdditionalConfiguration)

|

||||

* 1.4.3. [Security Considerations](#SecurityConsiderations)

|

||||

* 1.4.4. [Data Management](#DataManagement)

|

||||

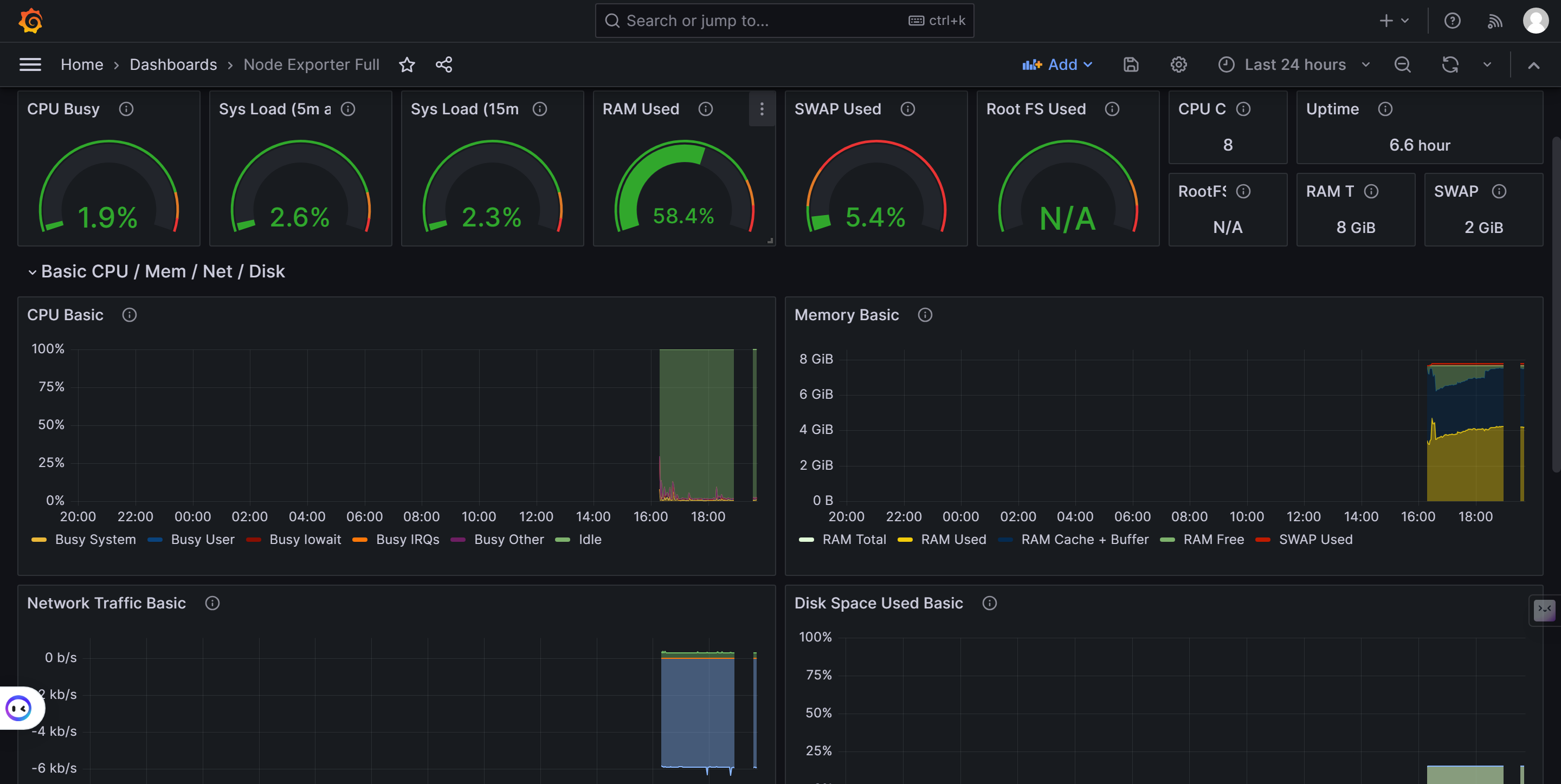

* 1.4.5. [Monitoring and Logging](#MonitoringandLogging)

|

||||

* 1.4.6. [Troubleshooting](#Troubleshooting)

|

||||

* 1.4.7. [Conclusion](#Conclusion)

|

||||

* 1.4.8. [Additional Resources](#AdditionalResources)

|

||||

* 2. [Further Configuration](#FurtherConfiguration)

|

||||

* 2.1. [Image Registry Configuration](#ImageRegistryConfiguration)

|

||||

* 2.2. [OpenIM Docker Network Configuration](#OpenIMDockerNetworkConfiguration)

|

||||

* 2.3. [OpenIM Configuration](#OpenIMConfiguration)

|

||||

* 2.4. [OpenIM Chat Configuration](#OpenIMChatConfiguration)

|

||||

* 2.5. [Zookeeper Configuration](#ZookeeperConfiguration)

|

||||

* 2.6. [MySQL Configuration](#MySQLConfiguration)

|

||||

* 2.7. [MongoDB Configuration](#MongoDBConfiguration)

|

||||

* 2.8. [Tencent Cloud COS Configuration](#TencentCloudCOSConfiguration)

|

||||

* 2.9. [Alibaba Cloud OSS Configuration](#AlibabaCloudOSSConfiguration)

|

||||

* 2.10. [Redis Configuration](#RedisConfiguration)

|

||||

* 2.11. [Kafka Configuration](#KafkaConfiguration)

|

||||

* 2.12. [OpenIM Web Configuration](#OpenIMWebConfiguration)

|

||||

* 2.13. [RPC Configuration](#RPCConfiguration)

|

||||

* 2.14. [Prometheus Configuration](#PrometheusConfiguration)

|

||||

* 2.15. [Grafana Configuration](#GrafanaConfiguration)

|

||||

* 2.16. [RPC Port Configuration Variables](#RPCPortConfigurationVariables)

|

||||

* 2.17. [RPC Register Name Configuration](#RPCRegisterNameConfiguration)

|

||||

* 2.18. [Log Configuration](#LogConfiguration)

|

||||

* 2.19. [Additional Configuration Variables](#AdditionalConfigurationVariables)

|

||||

* 2.20. [Prometheus Configuration](#PrometheusConfiguration-1)

|

||||

* 2.20.1. [General Configuration](#GeneralConfiguration)

|

||||

* 2.20.2. [Service-Specific Prometheus Ports](#Service-SpecificPrometheusPorts)

|

||||

* 2.21. [Qiniu Cloud Kodo Configuration](#QiniuCloudKODOConfiguration)

|

||||

|

||||

## 0. <a name='TableofContents'></a>OpenIM Config File

OpenIM needs to know where its configuration files are located. You can provide this path in any of the following ways:

1. **Using the Command-line Argument**:

   + **For Configuration Path**: When starting an OpenIM component, specify the path to the configuration folder with the `-c` or `--config_folder_path` option.

     ```bash
     _output/bin/platforms/linux/amd64/openim-api --config_folder_path="/your/config/folder/path"
     ```

   + **For Port Specification**: To run the component on a specific port, use the `-p` option followed by the port number.

     ```bash
     _output/bin/platforms/linux/amd64/openim-api -p 1234
     ```

   Note: If the port is not specified on the command line, OpenIM reads it from the configuration file. Setting the port through environment variables is not supported. We recommend consolidating settings in the configuration file for a more consistent and streamlined setup.

2. **Leveraging the Environment Variable**:

   You can also set OpenIM's configuration path with the `OPENIMCONFIG` environment variable, so you don't have to pass a command-line parameter every time.

   ```bash
   export OPENIMCONFIG="/path/to/your/config"
   ```

3. **Relying on the Default Path**:

   If neither a command-line argument nor the environment variable is provided, OpenIM falls back to the `config/` directory.
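
The three lookup options above can be summarized in a small shell function. This is a hypothetical sketch (`resolve_openim_config_dir` is not part of OpenIM), and it assumes that an explicit `--config_folder_path` value takes precedence over `OPENIMCONFIG`; the text above does not state that order explicitly.

```bash
# Hypothetical helper: mirrors the three ways OpenIM finds its config folder.
# ASSUMPTION: an explicit --config_folder_path value wins over OPENIMCONFIG.
resolve_openim_config_dir() {
  local flag_value="${1:-}"   # value given via -c / --config_folder_path, if any

  if [ -n "$flag_value" ]; then
    printf '%s\n' "$flag_value"        # 1. command-line argument
  elif [ -n "${OPENIMCONFIG:-}" ]; then
    printf '%s\n' "$OPENIMCONFIG"      # 2. environment variable
  else
    printf '%s\n' "config/"            # 3. default path
  fi
}

# Usage:
#   resolve_openim_config_dir "/etc/openim"   # prints: /etc/openim
#   resolve_openim_config_dir                 # prints $OPENIMCONFIG, or config/ if it is unset
```

Keeping the fallback chain in one place makes it easy to see which source "won" when a component picks up an unexpected configuration.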

## 1. <a name='OpenIMDeploymentGuide'></a>OpenIM Deployment Guide

Welcome to the OpenIM Deployment Guide! OpenIM is a versatile instant messaging server that can be deployed in several ways. This guide walks you through the main deployment strategies so that you can set up OpenIM in the way that best suits your needs.

### 1.1. <a name='DeploymentStrategies'></a>Deployment Strategies

OpenIM supports multiple deployment methods, each suited to different use cases and technical preferences:

1. **[Source Code Deployment Guide](https://doc.rentsoft.cn/guides/gettingStarted/imSourceCodeDeployment)**
2. **[Docker Deployment Guide](https://doc.rentsoft.cn/guides/gettingStarted/dockerCompose)**
3. **[Kubernetes Deployment Guide](https://github.com/openimsdk/open-im-server-deploy/tree/main/deployments)**

This guide focuses on the first two methods. The third method, Kubernetes deployment, is also supported, and its configuration can likewise be rendered from the variables in the `environment.sh` script.

### 1.2. <a name='SourceCodeDeployment'></a>Source Code Deployment

For source code deployment, the configuration is generated by running `make init`, which executes the script `./scripts/init-config.sh`. This script takes the variables defined in [`environment.sh`](https://github.com/openimsdk/open-im-server-deploy/blob/main/scripts/install/environment.sh) and uses them to render the [`config.yaml`](https://github.com/openimsdk/open-im-server-deploy/blob/main/deployments/templates/config.yaml) template, producing the [`config.yaml`](https://github.com/openimsdk/open-im-server-deploy/blob/main/config/config.yaml) configuration file.
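
To make the idea of rendering a template from variables concrete, here is a deliberately simplified sketch. It is not the actual logic of `./scripts/init-config.sh`; the `render_template` function and the two placeholders it handles are hypothetical.

```bash
# Simplified illustration only; this is NOT the real ./scripts/init-config.sh.
# Replaces literal ${PASSWORD} and ${DATA_DIR} placeholders in the given
# template text with the current values of those shell variables.
# Assumes the values contain no '|' or '&' (sed metacharacters in this command);
# the PASSWORD rules in this guide already forbid special characters.
render_template() {
  printf '%s\n' "$1" | sed \
    -e "s|\${PASSWORD}|${PASSWORD:-openIM123}|g" \
    -e "s|\${DATA_DIR}|${DATA_DIR:-/data/openim}|g"
}

# Usage:
#   DATA_DIR=/srv/openim
#   render_template 'dataDir: ${DATA_DIR}'    # prints: dataDir: /srv/openim
```

The real script covers many more variables, but the principle is the same: variable values flow from `environment.sh` into the rendered `config.yaml`.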

### 1.3. <a name='DockerComposeDeployment'></a>Docker Compose Deployment

Docker deployment uses a slightly more involved template. Within the [openim-server](https://github.com/openimsdk/openim-docker/tree/main/openim-server) directory of the openim-docker repository, each subdirectory corresponds to a version and is paired with a matching `openim-chat` version, as shown below:

| openim-server | openim-chat |
| ------------------------------------------------------------ | ------------------------------------------------------------ |
| [main](https://github.com/openimsdk/openim-docker/tree/main/openim-server/main) | [main](https://github.com/openimsdk/openim-docker/tree/main/openim-chat/main) |
| [release-v3.3](https://github.com/openimsdk/openim-docker/tree/main/openim-server/release-v3.3) | [release-v1.3](https://github.com/openimsdk/openim-docker/tree/main/openim-chat/release-v1.3) |
| [release-v3.2](https://github.com/openimsdk/openim-docker/tree/main/openim-server/release-v3.2) | [release-v1.2](https://github.com/openimsdk/openim-docker/tree/main/openim-chat/release-v1.2) |

You can modify the configuration by setting the corresponding environment variables, for instance:

```bash
export CHAT_IMAGE_VERSION="main"
export SERVER_IMAGE_VERSION="main"
```

These variables are defined in the [`environment.sh`](https://github.com/OpenIMSDK/open-im-server-deploy/blob/main/scripts/install/environment.sh) configuration:

```bash
readonly CHAT_IMAGE_VERSION=${CHAT_IMAGE_VERSION:-'main'}
readonly SERVER_IMAGE_VERSION=${SERVER_IMAGE_VERSION:-'main'}
```

> [!IMPORTANT]
> To learn more, read our image version strategy: https://github.com/openimsdk/open-im-server-deploy/blob/main/docs/contrib/images.md

If you set a variable, e.g. `export CHAT_IMAGE_VERSION="release-v1.3"`, that value (`release-v1.3`) takes precedence over the default. Once the image versions are determined this way, the configuration is rendered with `make init` (or `./scripts/init-config.sh`).
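
The precedence rule comes from the `${VAR:-default}` expansions in `environment.sh`: a value you have set is used if it is non-empty, otherwise the default `main` applies. A minimal sketch of the same pattern (the `resolve_image_versions` function is hypothetical):

```bash
# Hypothetical helper using the same ${VAR:-default} expansion as environment.sh.
# Note: ${VAR:-default} also falls back to the default when VAR is set but empty.
resolve_image_versions() {
  printf 'chat=%s server=%s\n' \
    "${CHAT_IMAGE_VERSION:-main}" \
    "${SERVER_IMAGE_VERSION:-main}"
}

# Usage (with SERVER_IMAGE_VERSION unset):
#   export CHAT_IMAGE_VERSION="release-v1.3"
#   resolve_image_versions    # prints: chat=release-v1.3 server=main
```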

> Note: You can also edit the `config.yaml` file directly instead of running `make init`.

### 1.4. <a name='EnvironmentVariableConfiguration'></a>Environment Variable Configuration

For convenience, we recommend configuring OpenIM through environment variables.

#### 1.4.1. <a name='Recommendedusingenvironmentvariables'></a>Recommended Environment Variables

+ PASSWORD

  + **Description**: Password for MongoDB, Redis, and MinIO.
  + **Default**: `openIM123`
  + **Notes**:
    + Minimum password length: 8 characters.
    + Special characters are not allowed.

  ```bash
  export PASSWORD="openIM123"
  ```

+ OPENIM_USER

  + **Description**: Username for Redis and MinIO.
  + **Default**: `root`

  ```bash
  export OPENIM_USER="root"
  ```

  > For MongoDB, the username is `openIM`; to change it, set `MONGO_OPENIM_USERNAME`, e.g. `export MONGO_OPENIM_USERNAME="openIM"`.

+ OPENIM_IP

  + **Description**: API address.
  + **Note**: If the server has an external IP, it is obtained automatically. On an internal network, set this variable to the server's internal IP.

  ```bash
  export OPENIM_IP="ip"
  ```

+ DATA_DIR

  + **Description**: Data mount directory for components.
  + **Default**: `/data/openim`

  ```bash
  export DATA_DIR="/data/openim"
  ```
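
To catch an invalid `PASSWORD` before running `make init`, you could check it against the two rules listed for that variable. This is a hypothetical helper, and it assumes "special characters" means anything other than letters and digits; the OpenIM scripts may apply their own, different checks.

```bash
# Hypothetical pre-flight check for the PASSWORD rules above.
# ASSUMPTION: "special characters" = anything that is not a letter or digit.
validate_openim_password() {
  local pw="$1"

  if [ "${#pw}" -lt 8 ]; then
    echo "PASSWORD must be at least 8 characters long" >&2
    return 1
  fi

  case "$pw" in
    *[![:alnum:]]*)
      echo "PASSWORD must not contain special characters" >&2
      return 1
      ;;
  esac
  return 0
}

# Usage:
#   validate_openim_password "$PASSWORD" && make init
```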

#### 1.4.2. <a name='AdditionalConfiguration'></a>Additional Configuration

##### MinIO Access and Secret Key

To secure your MinIO server, set an access key and a secret key. These credentials are used to authenticate requests to your MinIO server.

```bash
export MINIO_ACCESS_KEY="YourAccessKey"
export MINIO_SECRET_KEY="YourSecretKey"
```

##### MinIO Browser

MinIO comes with an embedded web-based object browser. You can control whether the browser is available by setting the `MINIO_BROWSER` environment variable.

```bash
export MINIO_BROWSER="on"
```

#### 1.4.3. <a name='SecurityConsiderations'></a>Security Considerations

##### TLS/SSL Configuration

For secure communication, we recommend enabling TLS/SSL for your MinIO server. To do this, provide the path to the directory containing the SSL certificate and key files.

```bash
export MINIO_CERTS_DIR="/path/to/certs/directory"
```

#### 1.4.4. <a name='DataManagement'></a>Data Management

##### Data Retention Policy

You may want to set up a data retention policy to automatically delete objects after a specified period.

```bash
export MINIO_RETENTION_DAYS="30"
```

#### 1.4.5. <a name='MonitoringandLogging'></a>Monitoring and Logging

##### Audit Logging

Enable audit logging to keep track of access to and changes in your data.

```bash
export MINIO_AUDIT="on"
```

#### 1.4.6. <a name='Troubleshooting'></a>Troubleshooting

##### Debug Mode

If you run into issues, enable debug mode to get more detailed logs for troubleshooting.

```bash
export MINIO_DEBUG="on"
```

#### 1.4.7. <a name='Conclusion'></a>Conclusion

With the environment variables configured to suit your requirements, your MinIO server should be ready to store and manage your object data securely. Be sure to verify the setup and monitor the logs for unusual activity or errors. Regularly update the MinIO server and review your configuration to keep up with changes and improvements in MinIO.

#### 1.4.8. <a name='AdditionalResources'></a>Additional Resources

+ [MinIO Client Quickstart Guide](https://docs.min.io/docs/minio-client-quickstart-guide)
+ [MinIO Admin Complete Guide](https://docs.min.io/docs/minio-admin-complete-guide)
+ [MinIO Docker Quickstart Guide](https://docs.min.io/docs/minio-docker-quickstart-guide)

See the MinIO documentation for more advanced configurations and usage scenarios.

## 2. <a name='FurtherConfiguration'></a>Further Configuration

### 2.1. <a name='ImageRegistryConfiguration'></a>Image Registry Configuration

**Description**: The image registry setting determines where Docker images are pulled from. The default is the GitHub Container Registry (`ghcr.io`), but you can switch to Docker Hub or Alibaba Cloud; Alibaba Cloud is especially useful for users in China because of its proximity.

| Parameter | Default Value | Description |
| ---------------- | --------------------- | ------------------------------------------------------------ |
| `IMAGE_REGISTRY` | `"ghcr.io/openimsdk"` | The registry from which Docker images will be pulled. Other options include `"openim"` and `"registry.cn-hangzhou.aliyuncs.com/openimsdk"`. |
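
`IMAGE_REGISTRY` is combined with an image name and a tag to form the full reference that Docker pulls. A sketch, assuming the server image is named `openim-server` and tagged with `SERVER_IMAGE_VERSION` (check the compose files for the exact image names); `openim_image_ref` is a hypothetical helper:

```bash
# Hypothetical helper: builds a full image reference from the registry,
# an image name (ASSUMED here to default to openim-server) and a version tag.
openim_image_ref() {
  local name="${1:-openim-server}"
  printf '%s/%s:%s\n' \
    "${IMAGE_REGISTRY:-ghcr.io/openimsdk}" \
    "$name" \
    "${SERVER_IMAGE_VERSION:-main}"
}

# Usage (with SERVER_IMAGE_VERSION unset):
#   export IMAGE_REGISTRY="registry.cn-hangzhou.aliyuncs.com/openimsdk"
#   openim_image_ref    # prints: registry.cn-hangzhou.aliyuncs.com/openimsdk/openim-server:main
```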

### 2.2. <a name='OpenIMDockerNetworkConfiguration'></a>OpenIM Docker Network Configuration

**Description**: This section configures the Docker network subnet and the IP addresses generated for the various services within that subnet.

| Parameter | Example Value | Description |
| --------------------------- | ----------------- | ------------------------------------------------------------ |
| `DOCKER_BRIDGE_SUBNET` | `'172.28.0.0/16'` | The subnet for the Docker network. |
| `DOCKER_BRIDGE_GATEWAY` | Generated IP | The gateway IP address within the Docker subnet. |
| `[SERVICE]_NETWORK_ADDRESS` | Generated IP | The network IP address for a specific service (e.g., MYSQL, MONGO, REDIS) within the Docker subnet. |
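
The table does not spell out how the gateway and service addresses are generated. As a rough sketch of deriving addresses from `DOCKER_BRIDGE_SUBNET`, the hypothetical helper below adds a host offset to the subnet's network address. The actual offsets used by `environment.sh` may differ, and the sketch assumes the subnet is written as a network address such as `172.28.0.0/16`.

```bash
# Hypothetical helper: returns the address at a given host offset inside a subnet.
# ASSUMPTIONS: the subnet is a network address whose last octet is 0
# (e.g. 172.28.0.0/16), and the offset fits inside the subnet.
docker_subnet_ip() {
  local subnet="$1" offset="$2"
  local base="${subnet%/*}"          # drop the prefix length -> 172.28.0.0
  local o1 o2 o3 o4 rest

  # Split the dotted address into its four octets.
  o1="${base%%.*}"; rest="${base#*.}"
  o2="${rest%%.*}"; rest="${rest#*.}"
  o3="${rest%%.*}"; o4="${rest#*.}"

  printf '%d.%d.%d.%d\n' "$o1" "$o2" \
    "$(( o3 + offset / 256 ))" "$(( o4 + offset % 256 ))"
}

# Usage (hypothetically treating offset 1 as the gateway):
#   docker_subnet_ip "${DOCKER_BRIDGE_SUBNET:-172.28.0.0/16}" 1   # prints: 172.28.0.1
```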

### 2.3. <a name='OpenIMConfiguration'></a>OpenIM Configuration

**Description**: OpenIM configuration sets up the directories for data, installation, configuration, and logs. It also configures the OpenIM server address and the ports for WebSocket and API.

| Parameter | Default Value | Description |
| ----------------------- | ------------------------ | ----------------------------------------- |
| `OPENIM_DATA_DIR` | `"/data/openim"` | Directory for OpenIM data. |
| `OPENIM_INSTALL_DIR` | `"/opt/openim"` | Directory where OpenIM is installed. |
| `OPENIM_CONFIG_DIR` | `"/etc/openim"` | Directory for OpenIM configuration files. |
| `OPENIM_LOG_DIR` | `"/var/log/openim"` | Directory for OpenIM logs. |
| `OPENIM_SERVER_ADDRESS` | Docker Bridge Gateway IP | OpenIM server address. |
| `OPENIM_WS_PORT` | `'10001'` | Port for OpenIM WebSocket. |
| `API_OPENIM_PORT` | `'10002'` | Port for OpenIM API. |

### 2.4. <a name='OpenIMChatConfiguration'></a>OpenIM Chat Configuration

**Description**: Configuration for OpenIM chat, including the data directory, server address, and ports for the API and chat functionalities.

| Parameter | Example Value | Description |
| ----------------------- | -------------------------- | ------------------------------- |
| `OPENIM_CHAT_DATA_DIR` | `"./openim-chat/[BRANCH]"` | Directory for OpenIM chat data. |
| `OPENIM_CHAT_ADDRESS` | Docker Bridge Gateway IP | OpenIM chat service address. |
| `OPENIM_CHAT_API_PORT` | `"10008"` | Port for OpenIM chat API. |
| `OPENIM_ADMIN_API_PORT` | `"10009"` | Port for OpenIM Admin API. |
| `OPENIM_ADMIN_PORT` | `"30200"` | Port for OpenIM chat Admin. |
| `OPENIM_CHAT_PORT` | `"30300"` | Port for OpenIM chat. |

### 2.5. <a name='ZookeeperConfiguration'></a>Zookeeper Configuration

**Description**: Configuration for Zookeeper, including schema, port, address, and credentials.

| Parameter | Example Value | Description |
| -------------------- | ------------------------ | ----------------------- |
| `ZOOKEEPER_SCHEMA` | `"openim"` | Schema for Zookeeper. |
| `ZOOKEEPER_PORT` | `"12181"` | Port for Zookeeper. |
| `ZOOKEEPER_ADDRESS` | Docker Bridge Gateway IP | Address for Zookeeper. |
| `ZOOKEEPER_USERNAME` | `""` | Username for Zookeeper. |
| `ZOOKEEPER_PASSWORD` | `""` | Password for Zookeeper. |

### 2.7. <a name='MongoDBConfiguration'></a>MongoDB Configuration

Configuration for MongoDB, including its port, address, and credentials.

| Parameter | Example Value | Description |
| ----------------------- | -------------- | ---------------------------- |
| `MONGO_PORT` | `"27017"` | Port used by MongoDB. |
| `MONGO_ADDRESS` | [Generated IP] | IP address for MongoDB. |
| `MONGO_USERNAME` | [User Defined] | Admin username for MongoDB. |
| `MONGO_PASSWORD` | [User Defined] | Admin password for MongoDB. |
| `MONGO_OPENIM_USERNAME` | [User Defined] | OpenIM username for MongoDB. |
| `MONGO_OPENIM_PASSWORD` | [User Defined] | OpenIM password for MongoDB. |

### 2.8. <a name='TencentCloudCOSConfiguration'></a>Tencent Cloud COS Configuration

Configuration for Tencent Cloud COS, including its bucket URL and credentials.

| Parameter | Example Value | Description |
| ------------------- | ------------------------------------------------------- | ------------------------------------ |
| `COS_BUCKET_URL` | `"https://temp-1252357374.cos.ap-chengdu.myqcloud.com"` | Tencent Cloud COS bucket URL. |
| `COS_SECRET_ID` | [User Defined] | Secret ID for Tencent Cloud COS. |
| `COS_SECRET_KEY` | [User Defined] | Secret key for Tencent Cloud COS. |
| `COS_SESSION_TOKEN` | [User Defined] | Session token for Tencent Cloud COS. |
| `COS_PUBLIC_READ` | `"false"` | Whether to allow public read access. |

### 2.9. <a name='AlibabaCloudOSSConfiguration'></a>Alibaba Cloud OSS Configuration

Configuration for Alibaba Cloud OSS, including its endpoint, bucket name, and credentials.

| Parameter | Example Value | Description |
| ----------------------- | ---------------------------------------------------- | ---------------------------------------- |
| `OSS_ENDPOINT` | `"https://oss-cn-chengdu.aliyuncs.com"` | Endpoint URL for Alibaba Cloud OSS. |
| `OSS_BUCKET` | `"demo-9999999"` | Bucket name for Alibaba Cloud OSS. |
| `OSS_BUCKET_URL` | `"https://demo-9999999.oss-cn-chengdu.aliyuncs.com"` | Bucket URL for Alibaba Cloud OSS. |
| `OSS_ACCESS_KEY_ID` | [User Defined] | Access key ID for Alibaba Cloud OSS. |
| `OSS_ACCESS_KEY_SECRET` | [User Defined] | Access key secret for Alibaba Cloud OSS. |
| `OSS_SESSION_TOKEN` | [User Defined] | Session token for Alibaba Cloud OSS. |
| `OSS_PUBLIC_READ` | `"false"` | Whether to allow public read access. |

### 2.10. <a name='RedisConfiguration'></a>Redis Configuration

Configuration for Redis, including its port, address, and credentials.

| Parameter | Example Value | Description |
| ---------------- | ---------------------------- | --------------------- |
| `REDIS_PORT` | `"16379"` | Port used by Redis. |
| `REDIS_ADDRESS` | `"${DOCKER_BRIDGE_GATEWAY}"` | IP address for Redis. |
| `REDIS_USERNAME` | [User Defined] | Username for Redis. |
| `REDIS_PASSWORD` | `"${PASSWORD}"` | Password for Redis. |

### 2.11. <a name='KafkaConfiguration'></a>Kafka Configuration

Configuration for Kafka, including its port, address, credentials, and topics.

| Parameter | Example Value | Description |
| ------------------------------ | ---------------------------- | --------------------------------------------- |
| `KAFKA_USERNAME` | [User Defined] | Username for Kafka. |
| `KAFKA_PASSWORD` | [User Defined] | Password for Kafka. |
| `KAFKA_PORT` | `"19094"` | Port used by Kafka. |
| `KAFKA_ADDRESS` | `"${DOCKER_BRIDGE_GATEWAY}"` | IP address for Kafka. |
| `KAFKA_LATESTMSG_REDIS_TOPIC` | `"latestMsgToRedis"` | Topic for the latest messages sent to Redis. |
| `KAFKA_OFFLINEMSG_MONGO_TOPIC` | `"offlineMsgToMongoMysql"` | Topic for offline messages sent to Mongo. |
| `KAFKA_MSG_PUSH_TOPIC` | `"msgToPush"` | Topic for messages to push. |
| `KAFKA_CONSUMERGROUPID_REDIS` | `"redis"` | Consumer group ID for Redis. |
| `KAFKA_CONSUMERGROUPID_MONGO` | `"mongo"` | Consumer group ID for Mongo. |
| `KAFKA_CONSUMERGROUPID_MYSQL` | `"mysql"` | Consumer group ID for MySQL. |
| `KAFKA_CONSUMERGROUPID_PUSH` | `"push"` | Consumer group ID for push. |

Note: Replace placeholder values (such as [User Defined], `${DOCKER_BRIDGE_GATEWAY}`, and `${PASSWORD}`) with actual values before deploying the configuration.
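
One way to confirm that no placeholders are left is to scan the rendered file, for example `config/config.yaml`. The sketch below is a hypothetical helper (not part of the OpenIM scripts) that flags any remaining `${VAR}`-style references or literal `[User Defined]` markers.

```bash
# Hypothetical helper: prints any line of a rendered config file that still
# contains a ${VAR}-style placeholder or a literal "[User Defined]" marker,
# and returns 1 if at least one was found.
check_rendered_config() {
  local file="$1"

  # Bracket expressions keep $, {, }, [ and ] literal in the ERE.
  if grep -nE '[$][{][A-Za-z_][A-Za-z0-9_]*[}]|[[]User Defined[]]' "$file"; then
    echo "unresolved placeholders found in $file" >&2
    return 1
  fi
  return 0
}

# Usage:
#   check_rendered_config config/config.yaml
```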

|

||||

|

||||

|

||||

|

||||

### 2.12. <a name='OpenIMWebConfiguration'></a>OpenIM Web Configuration

|

||||

|

||||

This section involves setting up OpenIM Web, including its port, address, and dist path.

|

||||

|

||||

| Parameter | Example Value | Description |

|

||||

| -------------------- | -------------------------- | ------------------------- |

|

||||

| OPENIM_WEB_PORT | "11001" | Port used by OpenIM Web. |

|

||||

| OPENIM_WEB_ADDRESS | "${DOCKER_BRIDGE_GATEWAY}" | Address for OpenIM Web. |

|

||||

| OPENIM_WEB_DIST_PATH | "/app/dist" | Dist path for OpenIM Web. |

|

||||

|

||||

### 2.13. <a name='RPCConfiguration'></a>RPC Configuration

|

||||

|

||||

Configuration for RPC, including the register and listen IP.

|

||||

|

||||

| Parameter | Example Value | Description |

|

||||

| --------------- | -------------- | -------------------- |

|

||||

| RPC_REGISTER_IP | [User Defined] | Register IP for RPC. |

|

||||

| RPC_LISTEN_IP | "0.0.0.0" | Listen IP for RPC. |

|

||||

|

||||

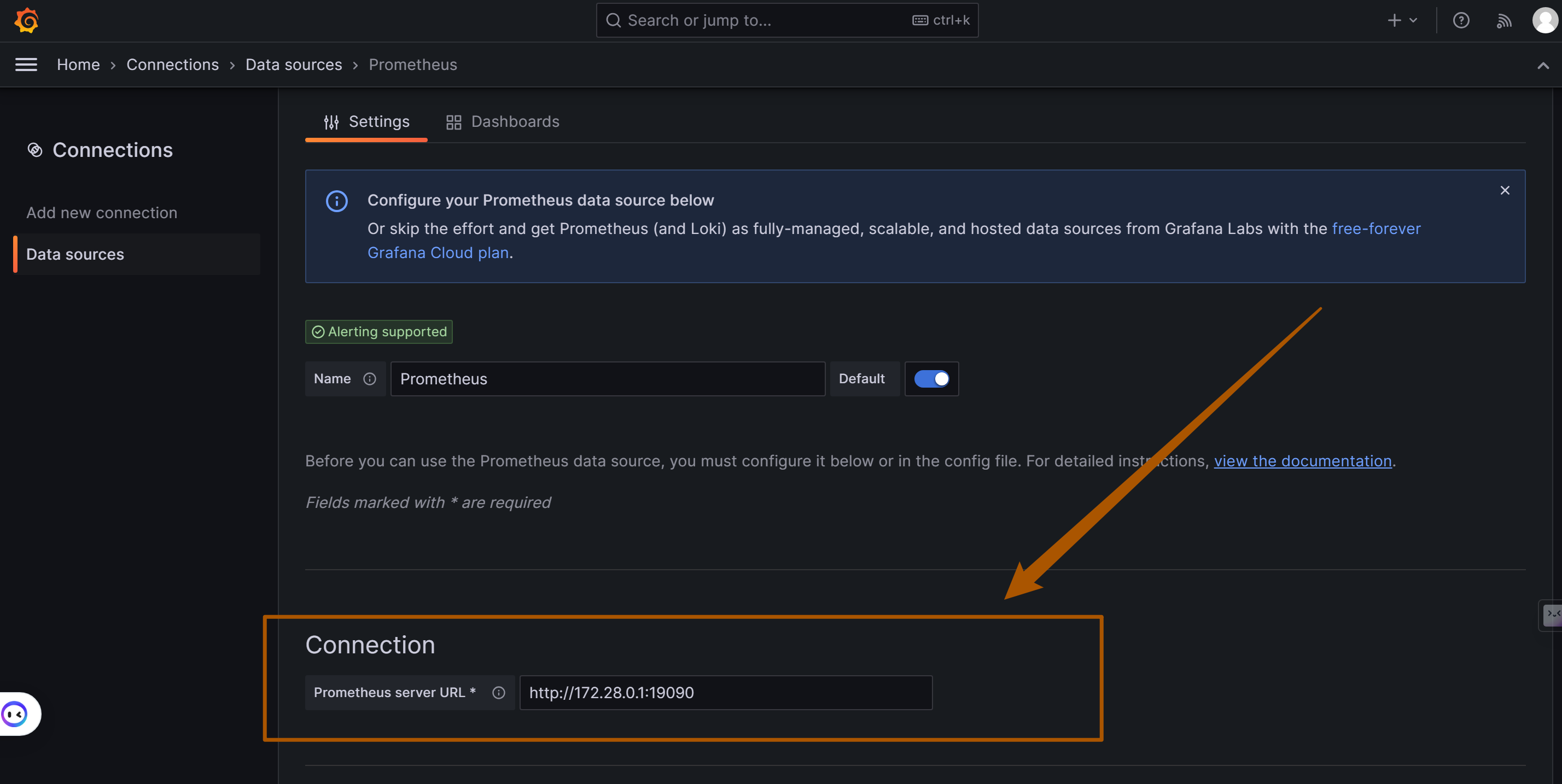

### 2.14. <a name='PrometheusConfiguration'></a>Prometheus Configuration

|

||||

|

||||

Setting up Prometheus, including its port and address.

|

||||

|

||||

| Parameter | Example Value | Description |

|

||||

| ------------------ | -------------------------- | ------------------------ |

|

||||

| PROMETHEUS_PORT | "19090" | Port used by Prometheus. |

|

||||

| PROMETHEUS_ADDRESS | "${DOCKER_BRIDGE_GATEWAY}" | Address for Prometheus. |

|

||||

|

||||

### 2.15. <a name='GrafanaConfiguration'></a>Grafana Configuration

|

||||

|

||||

Configuration for Grafana, including its port and address.

|

||||

|

||||

| Parameter | Example Value | Description |

|

||||

| --------------- | -------------------------- | --------------------- |

|

||||

| GRAFANA_PORT | "13000" | Port used by Grafana. |

|

||||

| GRAFANA_ADDRESS | "${DOCKER_BRIDGE_GATEWAY}" | Address for Grafana. |

|

||||

|

||||

### 2.16. <a name='RPCPortConfigurationVariables'></a>RPC Port Configuration Variables

|

||||

|

||||

Configuration for various RPC ports. Note: For launching multiple programs, just fill in multiple ports separated by commas. Try not to have spaces.

|

||||

|

||||

| Parameter | Example Value | Description |

|

||||

| --------------------------- | ------------- | ----------------------------------- |

|

||||

| OPENIM_USER_PORT | '10110' | OpenIM User Service Port. |

|

||||

| OPENIM_FRIEND_PORT | '10120' | OpenIM Friend Service Port. |

|

||||

| OPENIM_MESSAGE_PORT | '10130' | OpenIM Message Service Port. |

|

||||

| OPENIM_MESSAGE_GATEWAY_PORT | '10140' | OpenIM Message Gateway Service Port |

|

||||

| OPENIM_GROUP_PORT | '10150' | OpenIM Group Service Port. |

|

||||

| OPENIM_AUTH_PORT | '10160' | OpenIM Authorization Service Port. |

|

||||

| OPENIM_PUSH_PORT | '10170' | OpenIM Push Service Port. |

|

||||

| OPENIM_CONVERSATION_PORT | '10180' | OpenIM Conversation Service Port. |

|

||||

| OPENIM_THIRD_PORT | '10190' | OpenIM Third-Party Service Port. |
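
Because a port variable may hold several comma-separated ports, anything reading it has to split the list. A minimal sketch, assuming a hypothetical `parse_rpc_ports` helper that rejects spaces and malformed entries and prints one port per line:

```bash
# Hypothetical helper: validates a comma-separated port list such as
# '10110,10111' and prints one port per line.
parse_rpc_ports() {
  local list="$1" port

  # Accept only digits and commas (this also rejects spaces), and reject
  # empty lists, leading/trailing commas, and empty entries like '10110,,10111'.
  case "$list" in
    ''|*[!0-9,]*|,*|*,|*,,*)
      echo "invalid port list: '$list'" >&2
      return 1
      ;;
  esac

  # Split on commas only.
  local IFS=,
  for port in $list; do
    printf '%s\n' "$port"
  done
}

# Usage:
#   export OPENIM_USER_PORT='10110,10111'   # two instances of the user service
#   parse_rpc_ports "$OPENIM_USER_PORT"     # prints 10110 and 10111 on separate lines
```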

### 2.17. <a name='RPCRegisterNameConfiguration'></a>RPC Register Name Configuration

Configuration for the RPC register names of the various OpenIM services.

| Parameter | Example Value | Description |
| ----------------------------- | ------------------ | ----------------------------------- |
| `OPENIM_USER_NAME` | `"User"` | OpenIM User Service Name |
| `OPENIM_FRIEND_NAME` | `"Friend"` | OpenIM Friend Service Name |
| `OPENIM_MSG_NAME` | `"Msg"` | OpenIM Message Service Name |
| `OPENIM_PUSH_NAME` | `"Push"` | OpenIM Push Service Name |
| `OPENIM_MESSAGE_GATEWAY_NAME` | `"MessageGateway"` | OpenIM Message Gateway Service Name |
| `OPENIM_GROUP_NAME` | `"Group"` | OpenIM Group Service Name |
| `OPENIM_AUTH_NAME` | `"Auth"` | OpenIM Authorization Service Name |
| `OPENIM_CONVERSATION_NAME` | `"Conversation"` | OpenIM Conversation Service Name |
| `OPENIM_THIRD_NAME` | `"Third"` | OpenIM Third-Party Service Name |

### 2.18. <a name='LogConfiguration'></a>Log Configuration

Configuration for logging, including storage location, rotation time, and log level.

| Parameter | Example Value | Description |
| --------------------------- | -------------------------------- | --------------------------------- |
| `LOG_STORAGE_LOCATION` | `"${OPENIM_ROOT}/_output/logs/"` | Location for storing logs |
| `LOG_ROTATION_TIME` | `"24"` | Log rotation time (in hours) |
| `LOG_REMAIN_ROTATION_COUNT` | `"2"` | Number of log rotations to retain |
| `LOG_REMAIN_LOG_LEVEL` | `"6"` | Log level to retain |
| `LOG_IS_STDOUT` | `"false"` | Output logs to standard output |
| `LOG_IS_JSON` | `"false"` | Log in JSON format |
| `LOG_WITH_STACK` | `"false"` | Include stack info in logs |

### 2.19. <a name='AdditionalConfigurationVariables'></a>Additional Configuration Variables

Additional configuration variables for WebSocket, push notifications, chat, and callbacks.

| Parameter | Example Value | Description |
| -------------------------- | ----------------- | ------------------------------------------------------- |
| `WEBSOCKET_MAX_CONN_NUM` | `"100000"` | Maximum WebSocket connections |
| `WEBSOCKET_MAX_MSG_LEN` | `"4096"` | Maximum WebSocket message length |
| `WEBSOCKET_TIMEOUT` | `"10"` | WebSocket timeout |
| `PUSH_ENABLE` | `"getui"` | Push notification provider to enable |
| `GETUI_PUSH_URL` | [Generated URL] | GeTui push notification URL |
| `GETUI_MASTER_SECRET` | [User Defined] | GeTui master secret |
| `GETUI_APP_KEY` | [User Defined] | GeTui application key |
| `GETUI_INTENT` | [User Defined] | GeTui push intent |
| `GETUI_CHANNEL_ID` | [User Defined] | GeTui channel ID |
| `GETUI_CHANNEL_NAME` | [User Defined] | GeTui channel name |
| `FCM_SERVICE_ACCOUNT` | `"x.json"` | FCM service account file |
| `JPUSH_APP_KEY` | [User Defined] | JPush application key |
| `JPUSH_MASTER_SECRET` | [User Defined] | JPush master secret |
| `JPUSH_PUSH_URL` | [User Defined] | JPush push notification URL |
| `JPUSH_PUSH_INTENT` | [User Defined] | JPush push intent |
| `IM_ADMIN_USERID` | `"imAdmin"` | IM administrator ID |
| `IM_ADMIN_NAME` | `"imAdmin"` | IM administrator nickname |
| `MULTILOGIN_POLICY` | `"1"` | Multi-login policy |
| `CHAT_PERSISTENCE_MYSQL` | `"true"` | Chat persistence in MySQL |
| `MSG_CACHE_TIMEOUT` | `"86400"` | Message cache timeout |
| `GROUP_MSG_READ_RECEIPT` | `"true"` | Enable group message read receipts |
| `SINGLE_MSG_READ_RECEIPT` | `"true"` | Enable single message read receipts |
| `RETAIN_CHAT_RECORDS` | `"365"` | Retain chat records (in days) |
| `CHAT_RECORDS_CLEAR_TIME` | [Cron Expression] | Chat records clear time |
| `MSG_DESTRUCT_TIME` | [Cron Expression] | Message destruct time |
| `SECRET` | `"${PASSWORD}"` | Secret key |
| `TOKEN_EXPIRE` | `"90"` | Token expiry time |
| `FRIEND_VERIFY` | `"false"` | Enable friend verification |
| `IOS_PUSH_SOUND` | `"xxx"` | iOS push notification sound |
| `CALLBACK_ENABLE` | `"false"` | Enable callbacks |
| `CALLBACK_TIMEOUT` | `"5"` | Maximum timeout for a callback call |
| `CALLBACK_FAILED_CONTINUE` | `"true"` | Whether to continue to the next step if a callback fails |

### 2.20. <a name='PrometheusConfiguration-1'></a>Prometheus Configuration

Configuration for Prometheus, including whether it is enabled and the metrics ports for the various services.

#### 2.20.1. <a name='GeneralConfiguration'></a>General Configuration

| Parameter | Example Value | Description |
| ------------------- | ------------- | ----------------------------- |
| `PROMETHEUS_ENABLE` | `"false"` | Whether to enable Prometheus. |

#### 2.20.2. <a name='Service-SpecificPrometheusPorts'></a>Service-Specific Prometheus Ports

| Service | Parameter | Default Port Value | Description |
| ------------------------ | ------------------------ | ---------------------------- | -------------------------------------------------- |
| User Service | `USER_PROM_PORT` | '20110' | Prometheus port for the User service. |
| Friend Service | `FRIEND_PROM_PORT` | '20120' | Prometheus port for the Friend service. |
| Message Service | `MESSAGE_PROM_PORT` | '20130' | Prometheus port for the Message service. |
| Message Gateway | `MSG_GATEWAY_PROM_PORT` | '20140' | Prometheus port for the Message Gateway. |
| Group Service | `GROUP_PROM_PORT` | '20150' | Prometheus port for the Group service. |
| Auth Service | `AUTH_PROM_PORT` | '20160' | Prometheus port for the Auth service. |
| Push Service | `PUSH_PROM_PORT` | '20170' | Prometheus port for the Push service. |
| Conversation Service | `CONVERSATION_PROM_PORT` | '20230' | Prometheus port for the Conversation service. |
| RTC Service | `RTC_PROM_PORT` | '21300' | Prometheus port for the RTC service. |
| Third Service | `THIRD_PROM_PORT` | '21301' | Prometheus port for the Third service. |
| Message Transfer Service | `MSG_TRANSFER_PROM_PORT` | '21400, 21401, 21402, 21403' | Prometheus ports for the Message Transfer service. |
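
With `PROMETHEUS_ENABLE` set to `true`, Prometheus would need one scrape target per port in this table. As a sketch, the hypothetical `prom_targets` helper below turns the variables into `host:port` lines, falling back to the table's defaults and splitting the comma-separated Message Transfer ports. The host argument is an assumption (it defaults to `127.0.0.1`).

```bash
# Hypothetical helper: prints one host:port scrape target per Prometheus port,
# falling back to the table's default values when a variable is unset.
prom_targets() {
  local host="${1:-127.0.0.1}" ports port

  ports="${USER_PROM_PORT:-20110},${FRIEND_PROM_PORT:-20120},${MESSAGE_PROM_PORT:-20130}"
  ports="$ports,${MSG_GATEWAY_PROM_PORT:-20140},${GROUP_PROM_PORT:-20150},${AUTH_PROM_PORT:-20160}"
  ports="$ports,${PUSH_PROM_PORT:-20170},${CONVERSATION_PROM_PORT:-20230},${RTC_PROM_PORT:-21300}"
  ports="$ports,${THIRD_PROM_PORT:-21301},${MSG_TRANSFER_PROM_PORT:-21400,21401,21402,21403}"

  # Accept values written with spaces (e.g. '21400, 21401') by stripping them.
  ports="$(printf '%s' "$ports" | tr -d ' ')"

  # Split on commas; every entry becomes one target.
  local IFS=,
  for port in $ports; do
    printf '%s:%s\n' "$host" "$port"
  done
}

# Usage:
#   prom_targets 172.28.0.1   # one target per line, e.g. 172.28.0.1:20110
```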

### 2.21. <a name='QiniuCloudKODOConfiguration'></a>Qiniu Cloud Kodo Configuration

Configuration for Qiniu Cloud Kodo, including its endpoint, bucket name, and credentials.

| Parameter | Example Value | Description |
| ------------------------ | ------------------------------------ | --------------------------------------- |
| `KODO_ENDPOINT` | `"http://s3.cn-east-1.qiniucs.com"` | Endpoint URL for Qiniu Cloud Kodo. |
| `KODO_BUCKET` | `"demo-9999999"` | Bucket name for Qiniu Cloud Kodo. |
| `KODO_BUCKET_URL` | `"http://your.domain.com"` | Bucket URL for Qiniu Cloud Kodo. |
| `KODO_ACCESS_KEY_ID` | [User Defined] | Access key ID for Qiniu Cloud Kodo. |
| `KODO_ACCESS_KEY_SECRET` | [User Defined] | Access key secret for Qiniu Cloud Kodo. |
| `KODO_SESSION_TOKEN` | [User Defined] | Session token for Qiniu Cloud Kodo. |
| `KODO_PUBLIC_READ` | `"false"` | Whether to allow public read access. |


22

docs/contrib/error-code.md

Normal file


@@ -0,0 +1,22 @@

## Error Code Standards

Error codes are one of the main tools users have for locating and solving problems. When an application encounters an exception, users can look up the error code, along with its description and solution in the documentation, to quickly locate and resolve the problem.

### Error Code Naming Standards

- Follow CamelCase notation;
- Error codes are divided into two levels separated by a `.`, for example `InvalidParameter.BindError`. The first-level error code is platform-level, and the second-level error code is resource-level and can be customized for the scenario;
- The second-level error code may only use English letters or digits ([a-zA-Z0-9]), and should use standard English spelling, standard abbreviations, RFC term abbreviations, etc.;
- Avoid defining multiple error codes with the same meaning, for example `InvalidParameter.ErrorBind` and `InvalidParameter.BindError`.
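
The format rules above can be checked mechanically, for example in a CI lint step. A sketch, assuming a hypothetical `is_valid_error_code` helper and assuming both levels are written in UpperCamelCase as in the examples (the standard itself only pins down letters and digits for the second level). The "no duplicate semantics" rule still needs human review.

```bash
# Hypothetical check for the two-level error code format, e.g. InvalidParameter.BindError.
# ASSUMPTION: both levels start with an uppercase letter and contain only
# ASCII letters and digits. Expects a single-line argument.
is_valid_error_code() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[A-Z][A-Za-z0-9]*\.[A-Z][A-Za-z0-9]*$'
}

# Usage:
#   is_valid_error_code "InvalidParameter.BindError" && echo "ok"
```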

### First-Level Common Error Codes

| Error Code | Error Description | Error Type |